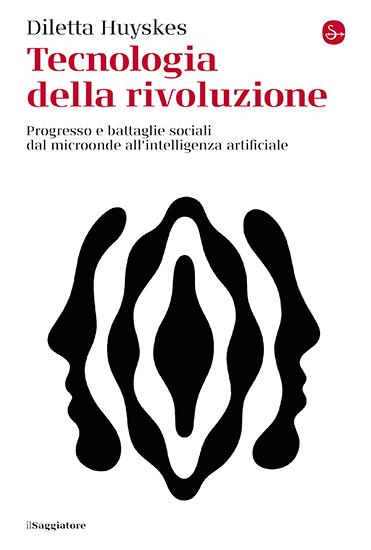

What do bicycles and artificial intelligence have in common? They discriminate. They were designed that way, yet few object. Most people lower their eyes, hunch their shoulders and… pedal on, or simply stay seated behind their desks. Either way, they look on as models like ChatGPT decide the point of view from which we should see the world, “instead of paying attention to the design and planning of technologies, because this software reproduces and amplifies the status quo. Discrimination included.” At least, that is the view of Diletta Huyskes, a researcher in the ethics of technology and artificial intelligence and author of “Tecnologia della Rivoluzione” (Technology of the Revolution, published by Il Saggiatore, 2024), a book devoted to this very topic.

She begins with the bicycle, which was not originally designed for women, and moves on to today’s inequalities, hidden yet present in our everyday experience. There are many, but the problem is that “the entire history of technological innovation has led us to believe that, by delegating to a machine or by automating, the human being no longer has any responsibility.” That is not true, yet with AI and ChatGPT the same belief seems to be taking hold again.


Awareness of technological discrimination

The more technology advances, the harder it becomes to recognize its role as a powerful amplifier and accelerator of inequalities. Grasping it requires a broad perspective. “There is a lot of work to do,” admits Huyskes, “and it starts with the data. If the training data is not very representative, the AI system will produce partial and potentially discriminatory results.” Extend that “potentially” to the decisions public administrations and governments make when they automate services for citizens, and the impacts… are unimaginable. Nor do we need to imagine them: just look at the Netherlands, where sensitive data was illegally retained, improperly stored and processed, and used to wrongly flag people as being at high risk of committing fraud, depriving them of childcare benefits. Or at the United States, where predictive justice “decides” that black people are, and always will be, at greater risk of reoffending.

“When governments and the State deal with technologies that affect us as citizens and influence our lives, it is essential that active protest groups arise. It happened in the 1970s and 1980s, and more recently in the United States and the Netherlands, but not in Italy. So far there has been no scandal to awaken consciences, but do we really need a scandal to realize what is happening?” Perhaps it would be enough never to treat technologies as given and inevitable (as she explains in her book), but always to situate them in context and assess the risks they may pose for particular communities and societies.

A participatory (re)action

This calls for a change of mindset and a willingness to take responsibility; it is not a question of skills. According to Huyskes, “what is needed is a civil society and a culture that can grasp the practical effects of a discriminating technology and make citizens understand them. A collective, ecosystemic and structural effort is needed to move from the technological level to the social and political level.”

Today these levels seem interconnected and interchangeable, like communicating vessels, yet they communicate little and share even less. Making this transition therefore requires a great, revolutionary leap. To get a good run-up, we can start by thinking of the act of programming every algorithm as a political gesture. “In this way, we refocus the problem, making it clear that responsibility lies not with end users, but with those who control these tools,” explains Huyskes. This is not meant to absolve individuals, us included, of responsibility. On the contrary, she firmly believes we need active interest groups that demand a say in decisions, through participatory processes in which their voices can be heard.

Is it urgent? Yes. Even if nothing bad has happened? Yet? Yes. “It is about prevention: thinking about the risks first and doing everything possible to reduce them. Let’s start, for example, by asking ourselves what impact the technology we are designing can have on people and society.” Datasets, model types, indicators, weights: every element can be regulated in light of its effect on society. The urgency of prevention is hard to perceive, because it is difficult to imagine the consequences of handing over one’s data to companies. “The problem is not understood: we often think there are no risks just because ‘we have nothing to hide’,” she explains, “but when citizens are profiled invasively, anyone who gains access to the data with less than noble intentions finds everything ready-made.”

Collaboration across differences

The “levers” that control new technologies, then, are in human hands. Few humans, often white, rich and Western, but humans all the same. People. This also applies to the black boxes that most AI models are. Rather than wallowing in indignant, paralyzing rage against the “big players”, it is better to spread the word that different conceptual and design choices are possible. It is better to look to the future, and to do so together with companies, starting with those that design technology. “They are the most important audience for this message,” agrees Huyskes, describing her ideal corporate team: an interdisciplinary one.

“We often try to turn IT experts into hybrid figures, but when you have a certain background and mindset, you have certain objectives, different from those of people with other roles and training,” she explains. This is not to question the values and sensibilities of those in the IT world: it is a matter of design mission and objectives. IT efficiency almost never coincides with social efficiency. “Data scientists, computer scientists, engineers and programmers use technical criteria to judge whether their artificial intelligence system works. These are based on feasibility and statistical satisfaction metrics,” she adds, “but this leaves out a fundamental piece: the social efficiency of that reasoning.” From now on, whenever we are told about a powerful, revolutionary, efficient technology, we would do well to ask: “For whom? In what sense?” That goes for the latest microwave, too. Or bicycle.